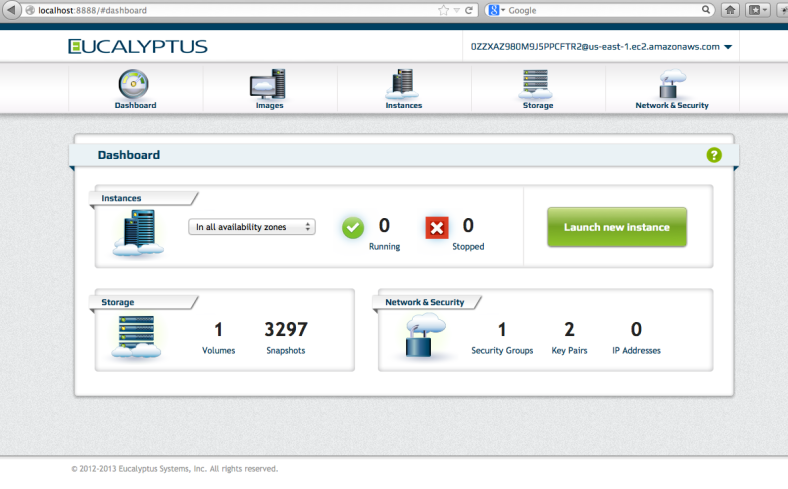

The Eucalyptus Management Console can be deployed in a variety of ways, but we’d obviously like it to be scalable, highly available and responsive. Last summer, I wrote up the details of deploying the console with Auto Scaling coupled with Elastic Load Balancing. The Cloud Formations service ties this all together by putting all of the details of how to use these services together in one template. This post will describe an example of how you can do this which works well on Eucalyptus (and AWS) and may guide you with your own application as well.

Let’s tackle a fairly simple deployment for the first round. For now, we’ll setup a LaunchConfig, AS group and ELB. We’ll also set up a security group for the AS group and allow access only to the ELB. Finally, we’ll set up a self signed SSL cert for the console. In another post, we’ll add memcached and and a cloudwatch alarm to automate scaling the console.

Instead of pasting pieces of the template here, why not open the template in another window. Under the “Resources” section, you’ll find the items I listed above. Notice “ConsoleLaunchConfig” pulls some values from the “Parameters” section such as KeyName, ImageId and InstanceType. Also uses is the “CloudIP”, but that gets included in a cloud-init script that is passed to UserData. Also, notice the SecurityGroups section that refers to the “ConsoleSecurityGroup” defined further down.

Right above that is the “ConsoleScalingGroup” which pulls in the launch config we just defined. Next “ConsoleELB” defines an ELB that listens for https traffic on 443 and talks to port 8888 on autoscaled instances. It defines a simple health check to verify the console process is running.

The “ConsoleSecurityGroup” uses attributes of the ELB to allow access only to the ELB security group on port 8888. We also allow for ssh ingress from a provided CIDR via “SSHLocation”.

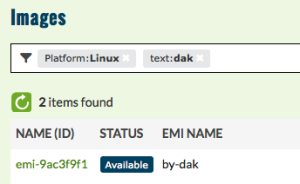

To automate deploying the console using this Cloud Formations template, I wrote a shell script to pass the required values and create the stack. At the top of the script, there are 3 values you will need to set based on your cloud. CLOUD_IP is the address for your cloud front end. SSH_KEY is the name of the Keypair you’d like to use for ssh access into the instances (if any). IMAGE_ID must be the emi-id of a CentOS 6.6 image on your cloud. There are other values you may wish to change just below that. Those are used to create a self-signed SSL certificate. This cert will be installed in your account and it’s name passed into “euform-create-stack” command along with several other values we’ve already discussed.

If you’ve run this script successfully, you can check the status of the stack by running “euform-describe-stacks console-stack”. Once completed, the output section will show the URL to use to connect to your new ELB front-end.

To adjust the number of instances in the scaling group, you can use euscale-update-auto-scaling-group –desired-capacity=N. Since the template defines max count as 3, you would need to make other adjustments for running a larger deployment.

Check back again to see how to configure a shared memcached instance and auto-scale the console.